In January 2011, FDA published its long-awaited guidance for industry, Process Validation: General Principles and Practices [1]. This replaces the previous 1987 version (updated 2004), and it is understandably a big change for those whose whole careers have been dominated by the previous approach. The question is: why was a change needed, and how drastic is it?

The need for a new approach

In the 1970s, a series of contamination issues in large-volume parenteral bottles led regulatory authorities and manufacturers to focus more on process understanding and quality assurance. The idea that finished product testing was not enough to assure product quality began to grow. Industry needed a better system to determine whether a product was ‘good’ [2].

In 1987, at the request of industry, FDA published its original Guideline on General Principles of Process Validation.

Industry wants assurance that its products are manufactured to a certain level of quality. But how is that assurance to be provided, and how can industry design its processes to deliver it? One way is through direct observation; another is through prediction. Direct observation may require the destruction of the products, so industry must generally use predictive methods to determine, with a certain level of confidence, that its processes and controls are satisfactory.

As manufacturing progressed to biopharmaceuticals, it became obvious that such things as cell growth mechanisms and purification processes were relatively poorly understood. Apparently small changes to the manufacturing process, for example a 5% adjustment to the speed of the impeller in a bioreactor, had a significant impact on final product quality. In such an environment, the focus for engineers and scientists was to create a repeatable process that manufactured consistent products, which could then be validated using clinical trials.

Such an approach has been very successful in licensing the first generation of biotherapeutics, but in the last decade there has been a realisation that the processes born of this philosophy are quite rigid and inflexible. Cash-to-cash cycle times (a measure of how long it takes a product to move through the supply chain) are three times longer than in any other industry and are dominated by quality release times, which may comprise over 90% of the overall time to produce a batch of material. This ties up hundreds of millions of dollars in inventory and, more importantly, discourages innovation. The approach also discourages a fundamental understanding of the link between quality attributes and process parameters, which can reduce the ability of a biomanufacturer to respond quickly when faced with inevitable adverse events such as contamination.

The new Quality by Design approach and the ICH Q8, Q9 and Q10 guidelines are designed to manage risk better in the manufacturing process. The objectives of these guidelines are to achieve a high-quality product on a consistent basis, establish and maintain a state of control, develop effective monitoring of product performance, perform statistical process control and capability analyses, and meet the important goal of facilitating continuous improvement.
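As a rough illustration of the capability analyses mentioned above, the sketch below computes the two common capability indices, Cp and Cpk, for a set of batch results against specification limits. The assay values and the 95% to 105% limits are hypothetical and are not taken from the guidance. The sketch uses the overall sample standard deviation, which some texts report as Pp and Ppk rather than Cp and Cpk.

```python
from statistics import mean, stdev


def process_capability(values, lsl, usl):
    """Return (Cp, Cpk) for measurements against lower/upper spec limits.

    Assumption: sigma is the overall sample standard deviation of the data,
    not a within-subgroup estimate.
    """
    mu = mean(values)
    sigma = stdev(values)
    # Cp compares the width of the specification to the process spread.
    cp = (usl - lsl) / (6 * sigma)
    # Cpk also penalises a process mean that sits off-centre.
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)
    return cp, cpk


# Hypothetical assay results (% of label claim) for ten batches.
assay = [99.8, 100.4, 100.1, 99.5, 100.9, 100.2, 99.7, 100.3, 100.0, 99.9]
cp, cpk = process_capability(assay, lsl=95.0, usl=105.0)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

Cpk can never exceed Cp, and the gap between the two indicates how far the process mean sits from the centre of the specification range.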

Spot the difference

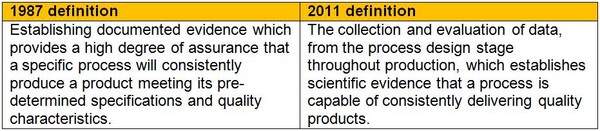

The 2011 guidance has updated the definition and shifted the focus from documentation to ‘scientific evidence’ throughout the product life cycle (see Table 1).

Table 1: General principles of validation definitions

In the past, the emphasis in process validation has been on collecting large quantities of data from validation batches, leading to a perception of process validation as largely a documentation exercise. The updated approach requires the manufacturer to collect data throughout the product life cycle and to evaluate it as scientific evidence that the process delivers a quality product [3].

What is required today

Gone is the certainty that good documentation is everything. Now, FDA expects industry to consider process validation as a scientific endeavour. Continuous improvement is used during the manufacturing process to iteratively focus attention on key risks to the organisation and correct them [4].

Together, the manufacturing and process improvement cycles provide concrete ways of designing and operating better biopharmaceutical production processes that deliver on the promise of better quality and lower compliance costs to manufacturers. Central to this effort is collecting and understanding process data in the facility.

Statistical process control is a good means of tracking critical process parameters and quality attributes, but it does not on its own allow the identification of critical manufacturing process parameters. What is needed is a means of correlating the hundreds of potential process parameters with any number of possible quality attributes, or even with early indicators of quality. Such data-informed decision making is an important supplement to the risk-based assessments that are done as part of ICH Q9 activities.
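One simple way to begin such a correlation exercise is to rank candidate process parameters by the strength of their linear correlation with a quality attribute. The sketch below is a minimal example under stated assumptions: the parameter names, batch values and purity figures are all hypothetical, and Pearson's r captures only linear relationships, so it serves as a screening aid rather than proof that a parameter is critical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def rank_parameters(parameters, attribute):
    """Rank process parameters by |r| against one quality attribute."""
    scores = {name: pearson(vals, attribute) for name, vals in parameters.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical records for six batches (one value per batch).
batches = {
    "impeller_rpm": [100, 105, 95, 110, 90, 102],
    "feed_temp_c": [36.8, 37.1, 37.0, 36.9, 37.2, 37.0],
    "ph": [7.00, 7.02, 6.98, 7.01, 6.99, 7.03],
}
purity = [98.1, 97.6, 98.7, 97.0, 99.1, 97.9]  # hypothetical quality attribute

ranking = rank_parameters(batches, purity)
for name, r in ranking:
    print(f"{name}: r = {r:+.2f}")
```

In this invented data set the impeller speed comes out on top with a strong negative correlation. With hundreds of parameters and only a handful of batches, some strong correlations will appear by chance, so any ranking of this kind would need to be confirmed by designed experiments or process knowledge.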

Conclusion

In summary, the current good manufacturing practice regulations require that manufacturing processes be designed and controlled to assure that in-process materials and the finished product meet predetermined quality requirements and do so consistently and reliably. This requires the intelligent use of sophisticated statistics.

References

1. FDA. Guidance for industry: process validation: general principles and practices. January 2011.

2. Long M, Baseman H, Henkels WD. FDA's New Process Validation Guidance: Industry Reaction, Questions, and Challenges. Pharmaceutical Technology. 2011;35:s16-s23.

3. Pharmout. FDA Guidance for Industry Update - Process Validation. 2009.

4. Bioproduction Group. Quality By Design in Biomanufacturing. [cited 2012 Jan 27]. Available from: www.bio-g.com/
